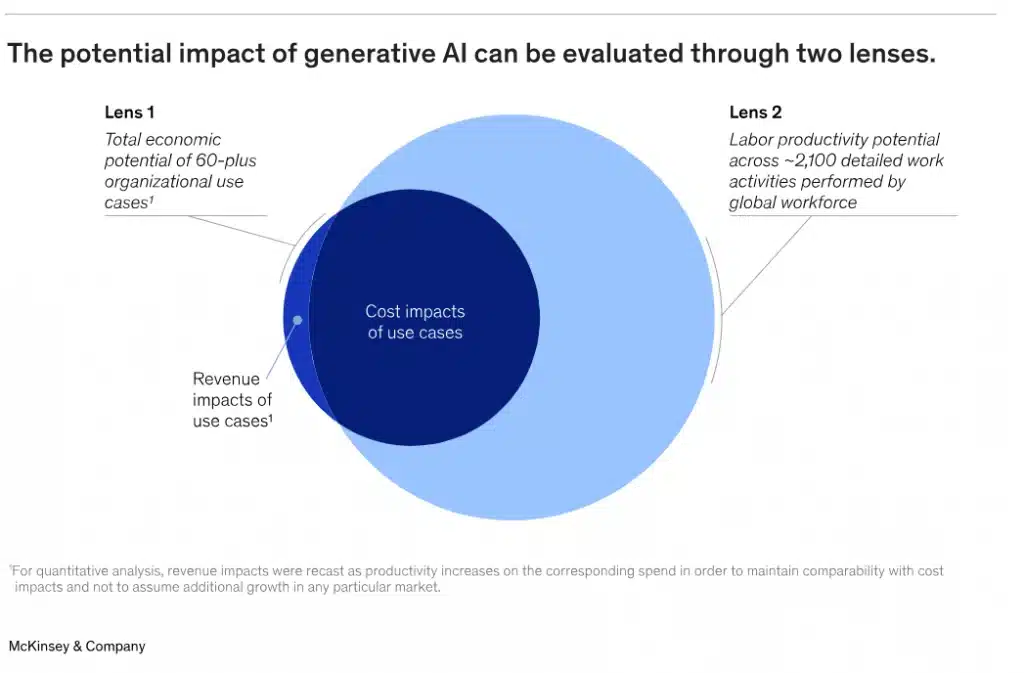

According to the McKinsey report “The Economic Potential of Generative AI,” generative AI could add an estimated $2.6 trillion to $4.4 trillion to the global economy each year, equivalent to approximately 4–8% of global GDP.

Compared to previous estimates, generative AI would raise the overall economic impact of AI by 15–40%, thanks to its integration into everyday software.

Updated on July 14th 2025

Impact of Generative AI

According to McKinsey, generative AI would have a significant impact on output across industries (including banking, high tech, and biotechnology). It would also raise labor productivity, partially offsetting the slowdown in employment growth and contributing to economic growth.

In particular, four business functions stand to benefit most from automation-driven productivity gains: Customer Operations, Marketing and Sales, Software Engineering, and Research and Development.

In Customer Operations, for example, generative AI could reduce the number of support requests handled by human agents by up to 50%. In Marketing and Sales, its use in content creation could raise productivity by 5 to 15%.

In Software Engineering, using generative AI for code development, debugging, and refactoring could increase productivity by 20 to 45%.

Impact examples

- Customer Operations – automation of up to 50% of interactions, an approach already adopted by many banks, telecoms, and utilities.

- Marketing & Sales – productivity increase of 5% to 15% in marketing, plus an estimated 3–5% gain in sales productivity.

- Software Engineering – boost of 20% to 45% thanks to AI-assisted code generation, refactoring, and debugging.

- R&D – optimizations in design and material selection, as well as in manufacturing, with an indirect impact on costs.

Overall, McKinsey estimates that generative AI could enable labor productivity growth of 0.1% to 0.6% annually through 2040. Combined with all other automation technologies, the boost to productivity growth could reach 0.5–3.4 percentage points annually.
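To give a sense of scale, an annual rate can be compounded over the whole period. The sketch below is plain arithmetic, not McKinsey's methodology: it assumes a 17-year horizon (2023, when the report was published, through 2040) and treats each rate as a constant extra growth rate that compounds year after year. The function name `cumulative_uplift` is hypothetical.

```python
def cumulative_uplift(annual_rate: float, years: int) -> float:
    """Relative gain after compounding a constant annual growth rate."""
    return (1 + annual_rate) ** years - 1

YEARS = 17  # assumption: 2023 through 2040

# Lower and upper bounds of the generative-AI-only estimate (0.1% and 0.6% per year).
for rate in (0.001, 0.006):
    print(f"{rate:.1%} per year -> {cumulative_uplift(rate, YEARS):.1%} after {YEARS} years")
```

Under these assumptions, 0.1% a year compounds to roughly 1.7% higher labor productivity by 2040, and 0.6% a year to roughly 10.7%.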

Risks and Limitations of Generative AI

The IDC research “Generative AI in EMEA: Opportunities, Risks, and Futures” identifies three main aspects of generative AI where challenges and risks lie: the intrinsic capabilities (and limitations) of the systems, the processes by which they are created, and the ways in which they can potentially be used.

For example, the “Capabilities” category covers risks such as AI “hallucinations,” bias, and generic content, while the “Use” category covers exposure to plagiarism, misinformation, fraud, and unplanned behavior.

The importance of control

AI based on large language models “knows how to speak, but doesn’t know what it says.”

In technical jargon, “hallucinations” are cases in which the AI returns distorted or completely fabricated information, often when it cannot find a reliable answer.

This is why it’s important to maintain human control not only during model training but also when verifying the generated output.

Among the main limitations of ChatGPT, for example, are:

Risk of bias: ChatGPT is trained on a large set of text data, and this data may contain distortions or biases. This means that AI can sometimes generate responses that are unintentionally biased or discriminatory.

Limited knowledge: Although ChatGPT has access to a large amount of information, it cannot access all the knowledge that we humans possess. It may not be able to answer questions on very specific or niche topics and may not be aware of recent developments or changes in certain fields.

However, risky as it may be, the use of large language models in business interactions will soon become inevitable.

Consumer Mistrust

A Gartner report (June 2025) highlights that 60% of consumers distrust Gen-AI, concerned about issues of accuracy, context, and transparency. Only 40% consider it reliable. Companies are therefore adopting a dual strategy: immediate operational improvements (chatbots, content automation), and the development of new business models.

IDC (2025) also continues to warn of significant risks: hallucinations, bias, generic content, plagiarism, misinformation, fraud, and unexpected behavior. Furthermore, the expansion of the “deepfake economy” makes generated content increasingly difficult to counter.

What precautions should be taken?

According to Gartner, it is essential that organizations adopt the following best practices:

Ensure that people (employees, customers, citizens) are aware that they are interacting with a machine by clearly labeling the entire conversation.

Use due diligence and technology-auditing tools to detect uncontrolled biases and other reliability issues in LLM use.

Protect privacy and security by ensuring that sensitive data is not entered into prompts, derived from LLM outputs, or used as a training dataset outside the organization.
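Two of these practices, labeling AI-generated messages and keeping sensitive data out of prompts, can be partly automated. The following Python sketch is illustrative only: the function names, the regular expressions, and the label text are hypothetical. Simple patterns like these miss many kinds of personal data, so a dedicated PII-detection tool is advisable in practice.

```python
import re

# Hypothetical patterns: they catch only common email and phone formats.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")
AI_LABEL = "[AI assistant] "  # hypothetical disclosure prefix

def redact_sensitive(text: str) -> str:
    """Replace emails and phone numbers with placeholders before prompting an LLM."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)

def label_ai_reply(reply: str) -> str:
    """Prefix every model reply so users know they are talking to a machine."""
    return AI_LABEL + reply

prompt = redact_sensitive("Customer mario.rossi@example.com (+39 333 123 4567) asks about a refund.")
print(prompt)
print(label_ai_reply("Your refund request has been received."))
```

Redaction runs before the text leaves the organization, so the placeholders, not the original values, are what the model sees.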

How to Implement Generative AI Safely

Here’s how to implement generative AI while keeping its risks under control:

- Define objectives and limitations

First, clarify where and why you want to use generative AI: customer service, marketing, HR, product development? Also specify the boundaries of use: what AI can do and what must remain under human supervision.

- Choose reliable and traceable models

Opt for providers that guarantee transparency, clear documentation, traceability of sources, and auditing capabilities. Avoid black-box solutions or those without control over the data generated and received.

- Apply a “human-in-the-loop” approach

AI should not replace, but complement, human intelligence. Always provide human oversight for critical decisions, especially in regulated fields (healthcare, finance, legal, etc.).
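A minimal sketch of such a review gate follows. It assumes that each AI draft carries a domain tag and a confidence score produced by the organization's own evaluation step, because a model's self-reported confidence is not necessarily reliable. The regulated domains, the 0.8 threshold, and the names `Draft`, `needs_human_review`, and `route` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy values; each organization would set its own.
REGULATED_DOMAINS = {"healthcare", "finance", "legal"}
MIN_CONFIDENCE = 0.8

@dataclass
class Draft:
    domain: str        # business area the output belongs to
    text: str          # AI-generated content awaiting use
    confidence: float  # assumed score in [0, 1] from an internal evaluation step

def needs_human_review(draft: Draft, critical: bool = False) -> bool:
    """A person must approve critical, regulated, or low-confidence outputs."""
    return (
        critical
        or draft.domain in REGULATED_DOMAINS
        or draft.confidence < MIN_CONFIDENCE
    )

def route(draft: Draft, critical: bool = False) -> str:
    """Send the draft either to a human review queue or straight to use."""
    return "human_review_queue" if needs_human_review(draft, critical) else "auto_send"

print(route(Draft("marketing", "New product teaser", 0.93)))
print(route(Draft("finance", "Loan eligibility summary", 0.97)))
```

The gate deliberately errs on the side of review: a single condition (critical decision, regulated field, or low confidence) is enough to require a person.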

- Integrate governance and monitoring tools

Implement dashboards and metrics to monitor accuracy, efficiency, data use, and the presence of bias. Adopt continuous validation policies, regular updates, and quality testing.
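As an illustration of such monitoring, the sketch below aggregates reviewer feedback into two simple metrics, accuracy and bias-flag rate, and raises an alert when either crosses a threshold. The `ReviewRecord` schema, the `summarize` function, and the default thresholds are assumptions. A real dashboard would track more metrics and break them down by use case.

```python
from dataclasses import dataclass

@dataclass
class ReviewRecord:
    """One human-reviewed AI output (hypothetical schema)."""
    use_case: str
    correct: bool       # reviewer judged the output accurate
    bias_flagged: bool  # reviewer flagged biased or discriminatory content

def summarize(records: list[ReviewRecord],
              min_accuracy: float = 0.9,
              max_bias_rate: float = 0.0) -> dict:
    """Compute accuracy and bias-flag rate, and whether an alert should fire."""
    total = len(records)
    if total == 0:
        return {"total": 0, "accuracy": None, "bias_rate": None, "alert": False}
    accuracy = sum(r.correct for r in records) / total
    bias_rate = sum(r.bias_flagged for r in records) / total
    return {
        "total": total,
        "accuracy": accuracy,
        "bias_rate": bias_rate,
        "alert": accuracy < min_accuracy or bias_rate > max_bias_rate,
    }

log = [
    ReviewRecord("customer_service", True, False),
    ReviewRecord("customer_service", True, False),
    ReviewRecord("customer_service", False, True),
    ReviewRecord("customer_service", True, False),
]
report = summarize(log)
print(report)
```

Running the summary regularly on fresh review logs is one way to put the continuous validation policy into practice.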

- Train your team and communicate transparently

Invest in internal training: people need to understand what AI does, how to interact with it, and how to recognize its limitations. And be transparent with customers and stakeholders: always declare when content has been generated by AI.

Conclusions

Generative AI represents a technological and productive revolution, but it requires a strong commitment to accountability, transparency, and governance. Only a structured approach, one that builds consumer trust and ensures adequate oversight and data protection, can turn potential into real and sustainable value.

Ethical measures, informed digital citizenship, and robust governance are no longer optional extras: they are essential to managing this new era well.

FAQs about generative AI

What is generative AI?

Generative AI is a branch of artificial intelligence that creates new content, such as text, images, audio, code, or video, from a text prompt or other input data. Unlike traditional AI, which mainly analyzes data or makes predictions, generative AI produces original output that imitates human creativity. Text generation relies on Large Language Models (LLMs), such as the ones behind ChatGPT.

How does ChatGPT work?

Models like ChatGPT are trained on large volumes of text to learn linguistic structures, meanings, and contexts. When the model receives a prompt (a question or instruction), it calculates the probability of the words (more precisely, tokens) that may come next, generating coherent and contextually appropriate responses. However, it doesn’t actually “understand” the meaning; it simulates comprehension through correlations learned from the data.
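The idea of predicting the next word from learned statistics can be illustrated with a toy bigram model. This is a deliberate simplification, not how ChatGPT is implemented: real LLMs use neural networks over tokens and take long contexts into account. This sketch only counts which word follows which in a tiny, made-up corpus and always picks the most probable one (greedy decoding). All function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn follow-up counts into probabilities (empty if the word is unknown)."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def generate(model: dict[str, Counter], start: str, length: int = 5) -> str:
    """Greedy decoding: repeatedly append the most probable next word."""
    words = [start.lower()]
    for _ in range(length):
        probs = next_word_probabilities(model, words[-1])
        if not probs:
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)

corpus = "the model predicts the next word and the model repeats the pattern"
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))
print(generate(model, "the", 4))
```

Here "the" is followed by "model" half the time, so greedy decoding produces "the model predicts the model": fluent-looking text driven purely by word correlations, without any grasp of meaning.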

Is generative AI really intelligent?

It depends on what we mean by “intelligence.” Generative AI lacks awareness, emotion, or intention. It’s very good at mimicking language and responding to complex input, but it doesn’t have consciousness or understand the world like a human. It’s a powerful tool, useful if used wisely, but it requires human supervision and context to be reliable.
